Converting between microwaves and light

Computers and networks today are hitting a wall. As demand for data and computation explodes, even the best chips waste too much energy moving information around, which generates heat and emissions and limits performance. The problem is even worse for quantum technologies, where energy loss blocks scaling and stands in the way of useful applications.

Light is an attractive way to move information: it’s fast, low-loss, and works at room temperature. But the most advanced processors – including quantum ones – use microwaves, not light. To connect them efficiently, we need to convert signals from microwaves to light.

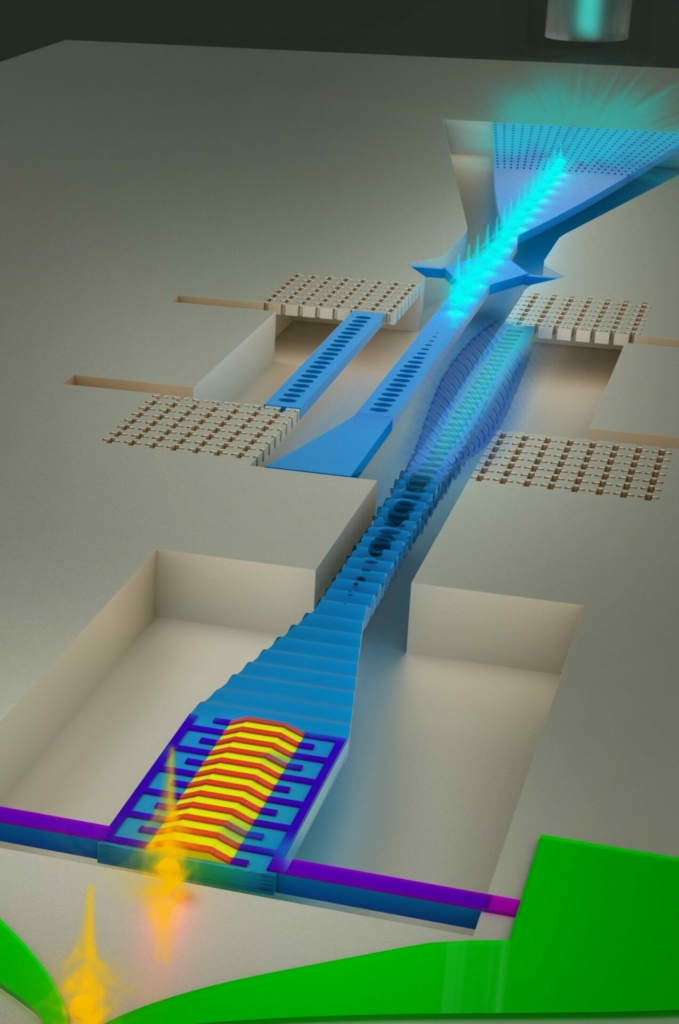

This is extremely hard because microwave wavelengths are about 10,000 times longer than those of light. We aim to solve this by using sound as a bridge between microwaves and light. Our research develops new hardware in which microwaves become sound waves and sound becomes light, without losing the delicate quantum information they carry.
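The size mismatch follows from wavelength = speed / frequency. The sketch below checks the order of magnitude under assumed example values that the text does not specify: a 5 GHz microwave signal (a typical range for superconducting processors) and 1550 nm telecom light. The helper `wavelength` is hypothetical and only for illustration.

```python
# Speed of light in vacuum (m/s), exact SI value.
C = 299_792_458.0


def wavelength(frequency_hz: float, speed_m_s: float = C) -> float:
    """Return the wavelength (m) of a wave with the given frequency and speed."""
    return speed_m_s / frequency_hz


# Assumed example values (not from the text).
MICROWAVE_HZ = 5e9             # 5 GHz microwave signal
OPTICAL_WAVELENGTH_M = 1550e-9  # telecom light in vacuum

microwave_wavelength_m = wavelength(MICROWAVE_HZ)  # roughly 6 cm
ratio = microwave_wavelength_m / OPTICAL_WAVELENGTH_M

print(f"microwave wavelength: {microwave_wavelength_m * 100:.1f} cm")
print(f"microwave / optical wavelength ratio: {ratio:,.0f}")
```

With these assumptions the ratio comes out at a few times 10^4, consistent with the order of magnitude quoted above; higher microwave frequencies or longer optical wavelengths shrink it somewhat.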

These converters could eventually enable low-energy data links in, for example, supercomputers for AI, as well as in quantum sensors, communication systems, and computers. See also this research presentation, this article, and this article.

Processing light with sound on a chip

Light and gigahertz sound are well matched to each other, as both have wavelengths of around one micrometer in common materials such as silicon. Because their wavelengths match, they interact strongly, so sound can process electromagnetic signals with low power consumption. One example is the conversion between microwaves and light described above.
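A quick estimate shows why the two wavelengths land in the same range. The material constants below are approximate literature values assumed for illustration, not taken from the text: a longitudinal sound speed of about 8,400 m/s in silicon and a refractive index of about 3.48 at 1550 nm. The 5 GHz acoustic frequency is likewise an assumed example.

```python
# Assumed approximate material parameters for silicon (not from the text).
SOUND_SPEED_SI_M_S = 8_400.0   # longitudinal acoustic speed along [100]
REFRACTIVE_INDEX_SI = 3.48     # near 1550 nm

# Assumed example operating points.
ACOUSTIC_FREQ_HZ = 5e9                  # "gigahertz sound"
OPTICAL_VACUUM_WAVELENGTH_M = 1550e-9   # telecom light

# Acoustic wavelength: lambda = v / f.
acoustic_wavelength_m = SOUND_SPEED_SI_M_S / ACOUSTIC_FREQ_HZ

# Light slows down inside silicon, so its wavelength shrinks by the index.
optical_wavelength_in_si_m = OPTICAL_VACUUM_WAVELENGTH_M / REFRACTIVE_INDEX_SI

print(f"acoustic wavelength in Si: {acoustic_wavelength_m * 1e6:.2f} um")
print(f"optical wavelength in Si:  {optical_wavelength_in_si_m * 1e6:.2f} um")
```

Both come out at a fraction of a micrometer to a couple of micrometers, whereas a 5 GHz microwave in free space has a wavelength of several centimeters.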

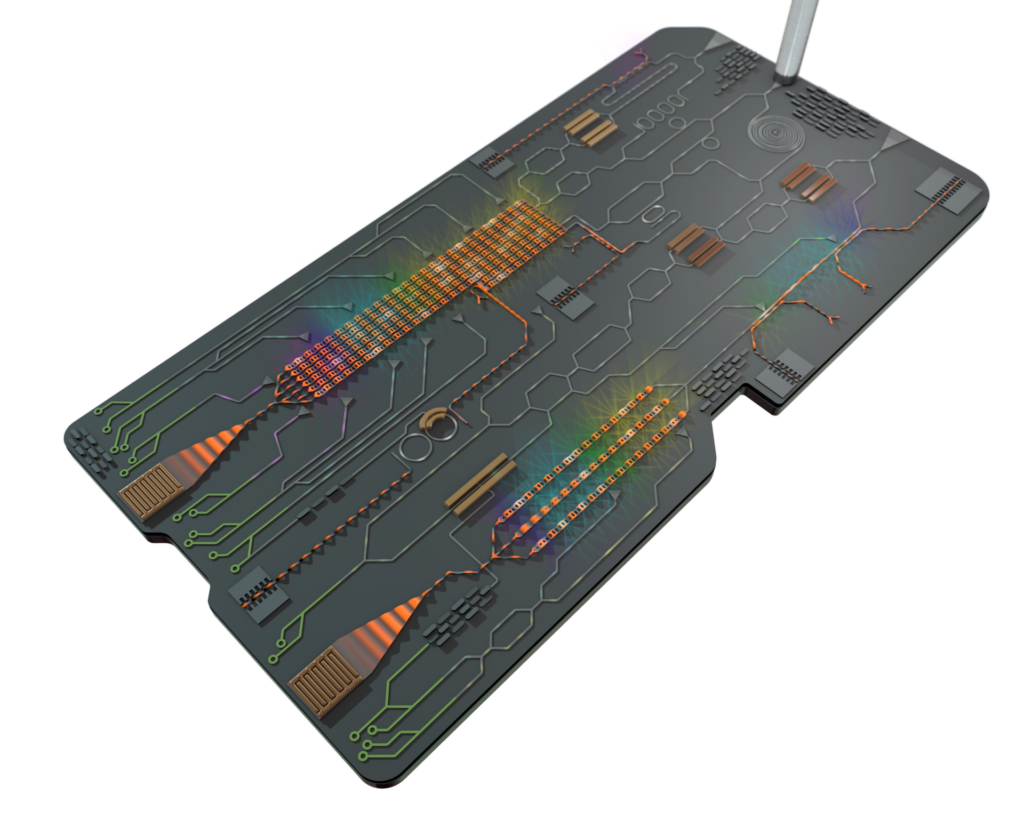

Other examples include classical electro-optic modulation, optical non-reciprocal elements such as isolators and circulators, and optical beam-steering sensors. These beam-steering sensors, called "lidars", can exploit sound trapped on the surface of a silicon chip to steer light efficiently and form images, a much-needed function in autonomous systems such as self-driving cars. Together, these examples point toward a vision (pictured) in which light and gigahertz sound are co-integrated on mass-manufacturable chips, letting us capture, analyze, and sculpt light both on and off the chip in new ways. Read more in this review.

Acoustic quantum processors

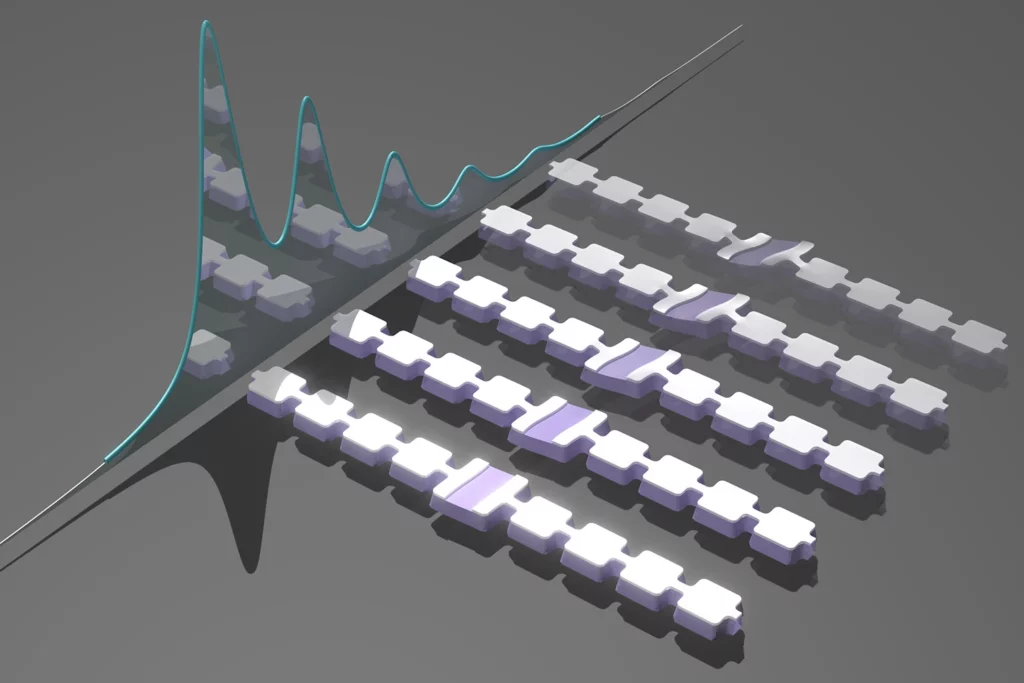

Like the microwave radiation used in mobile phones and wireless networks, acoustic waves can oscillate at gigahertz frequencies. However, acoustics offers unique advantages over microwaves. At the same frequency, the acoustic wavelength is about five orders of magnitude smaller than the microwave wavelength, which allows far more compact devices with less crosstalk. In addition, acoustic systems can be exceptionally coherent, with lifetimes of up to seconds observed even in micron-scale phononic crystals. These acoustic systems can also be coupled to superconducting qubits via piezoelectricity, strongly enough that even individual phonons can be resolved (Image credit: Wentao Jiang). We aim to leverage these unique acoustic properties for quantum information processing. If this impressive acoustic coherence can be maintained with microwave control and read-out, it could enable new quantum sensors and memories and let us scale up microwave quantum processors in a hardware-efficient way.
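Two of the numbers in this paragraph can be checked with short arithmetic. At equal frequency, the microwave-to-acoustic wavelength ratio reduces to c / v_sound, and a second-scale energy lifetime τ corresponds to a quality factor Q = 2πfτ. The sketch assumes a 5 GHz mode and a sound speed of 3,000 m/s (roughly that of slower piezoelectric or surface acoustic waves); neither value comes from the text. It also uses the free-space microwave wavelength, whereas on-chip microwave lines shorten it by a factor of a few.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

# Assumed example values (not from the text).
FREQ_HZ = 5e9               # shared microwave / acoustic frequency
SOUND_SPEED_M_S = 3_000.0   # assumed acoustic phase velocity
LIFETIME_S = 1.0            # energy lifetime on the order of a second

microwave_wavelength_m = C / FREQ_HZ
acoustic_wavelength_m = SOUND_SPEED_M_S / FREQ_HZ

# At equal frequency the ratio is simply c / v_sound.
orders_of_magnitude = math.log10(microwave_wavelength_m / acoustic_wavelength_m)

# Quality factor of a mode with energy lifetime tau: Q = 2 * pi * f * tau.
quality_factor = 2 * math.pi * FREQ_HZ * LIFETIME_S

print(f"wavelength ratio: 10^{orders_of_magnitude:.2f}")
print(f"quality factor:   {quality_factor:.2e}")
```

With these assumptions the ratio is close to 10^5 and Q is of order 10^10; with silicon's faster sound (about 8,400 m/s) the ratio drops to roughly 4 × 10^4.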